Cluster Managers in Spark

This tutorial explains what a cluster manager is, what role it plays in a Spark cluster, and which cluster managers Apache Spark supports.

What is a cluster?

A cluster is a set of tightly or loosely coupled computers connected through a LAN (Local Area Network). The computers in a cluster are called nodes. The nodes may have different hardware and operating systems, or they may all share the same setup. Managing the nodes' resources, and deciding which applications get to run processes on them, is the job of software called a cluster manager.

What does a cluster manager do in an Apache Spark cluster?

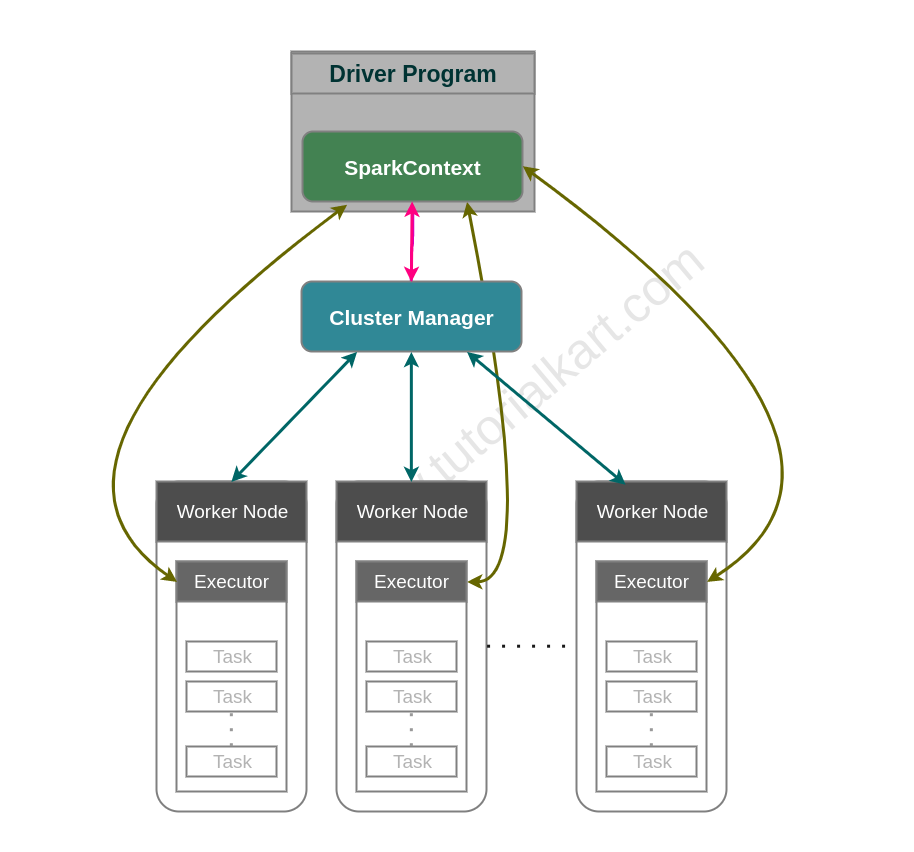

A Spark application contains a main program (the main method in a Java Spark application), which is called the driver program. The driver program creates a SparkContext object, which can be configured with settings such as the executor memory and the number of executors. The cluster manager keeps track of the resources (nodes) available in the cluster. When the SparkContext object is created, it connects to the cluster manager to negotiate for executors, and the cluster manager launches executors on some or all of the available nodes, depending on what the application requests and what resources are free. Once the executors are running, the driver sends them the application code and the tasks to execute.

Multiple Spark applications can run on a single cluster. The procedure is the same for each: the SparkContext of every application requests executors from the cluster manager. In a nutshell, the cluster manager allocates executors on the nodes so that a Spark application can run.
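To make the negotiation concrete, here is a toy model in Python of what the paragraph above describes: a cluster manager that tracks free memory per node and grants executor requests from several applications. It is a simplification, not Spark's actual scheduler. `ToyClusterManager`, `Node`, and `request_executors` are hypothetical names, memory is the only resource modeled, and the placement rule (node with the most free memory first) is an assumption. The two request parameters loosely mirror Spark's `spark.executor.instances` and `spark.executor.memory` settings.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A worker node as tracked by the toy cluster manager (hypothetical)."""
    name: str
    free_memory_mb: int
    executors: list = field(default_factory=list)  # app ids with an executor here


class ToyClusterManager:
    """Hypothetical model of a cluster manager; not a Spark API."""

    def __init__(self, nodes):
        self.nodes = nodes

    def request_executors(self, app_id, num_executors, executor_memory_mb):
        """Allocate up to num_executors executors for app_id.

        Each executor needs executor_memory_mb on a single node. Returns the
        names of the nodes that received an executor; the list is shorter
        than requested when the cluster runs out of free memory.
        """
        granted = []
        while len(granted) < num_executors:
            # Only nodes that can still fit one more executor are candidates.
            candidates = [n for n in self.nodes if n.free_memory_mb >= executor_memory_mb]
            if not candidates:
                break
            # Assumed policy: the node with the most free memory wins
            # (ties go to the node listed first).
            node = max(candidates, key=lambda n: n.free_memory_mb)
            node.free_memory_mb -= executor_memory_mb
            node.executors.append(app_id)
            granted.append(node.name)
        return granted


# Two applications share one cluster, each asking for 2 executors of 2 GB.
cluster = ToyClusterManager([Node("node-1", 4096), Node("node-2", 2048)])
print(cluster.request_executors("app-A", num_executors=2, executor_memory_mb=2048))
# → ['node-1', 'node-1']
print(cluster.request_executors("app-B", num_executors=2, executor_memory_mb=2048))
# → ['node-2']
```

Here app-B receives only one of the two executors it asked for, because app-A already holds all of node-1's memory: the cluster manager can only hand out resources that are still free.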

Cluster managers supported in Apache Spark

The following cluster managers are available in Apache Spark.

Spark Standalone Cluster Manager

The standalone cluster manager is a simple cluster manager that is included with Spark.

Apache Mesos

Apache Mesos is a general cluster manager that can also run Hadoop MapReduce and service applications.

Hadoop YARN

Hadoop YARN is the resource manager introduced in Hadoop 2.
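A Spark application picks its cluster manager through the master URL it is given. As a small illustration, the hypothetical helper below builds that URL for the three cluster managers listed above, using the formats from the Spark documentation: `spark://HOST:PORT` for standalone (default port 7077), `mesos://HOST:PORT` for Mesos (default port 5050), and plain `yarn` for YARN, whose ResourceManager address is read from the Hadoop configuration. The function name `master_url` and its arguments are assumptions made for this sketch; Spark itself simply takes the URL string.

```python
from typing import Optional

# Default master ports: 7077 for the standalone master, 5050 for the Mesos master.
DEFAULT_PORTS = {"standalone": 7077, "mesos": 5050}
SCHEMES = {"standalone": "spark", "mesos": "mesos"}


def master_url(cluster_manager: str, host: str = "", port: Optional[int] = None) -> str:
    """Build a Spark master URL for the given cluster manager (hypothetical helper)."""
    if cluster_manager == "yarn":
        # YARN needs no host here: the ResourceManager address comes from the
        # Hadoop configuration pointed to by HADOOP_CONF_DIR or YARN_CONF_DIR.
        return "yarn"
    if cluster_manager not in DEFAULT_PORTS:
        raise ValueError(f"unknown cluster manager: {cluster_manager!r}")
    if not host:
        raise ValueError(f"{cluster_manager} needs the master's host name")
    if port is None:
        port = DEFAULT_PORTS[cluster_manager]
    return f"{SCHEMES[cluster_manager]}://{host}:{port}"


print(master_url("standalone", "master-host"))  # spark://master-host:7077
print(master_url("mesos", "mesos-host"))        # mesos://mesos-host:5050
print(master_url("yarn"))                       # yarn
```

The resulting string is what you would pass to `spark-submit --master`, or to `SparkConf.setMaster` in PySpark before creating the SparkContext.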

Conclusion

In this Apache Spark tutorial, we learned what a cluster manager is, how a Spark application obtains executors from it, and which cluster managers Spark supports.