Load data from JSON data source and execute Spark SQL query

Apache Spark Dataset and DataFrame APIs provides an abstraction to the Spark SQL from data sources. Dataset provides the goodies of RDDs along with the optimization benefits of Spark SQL’s execution engine.

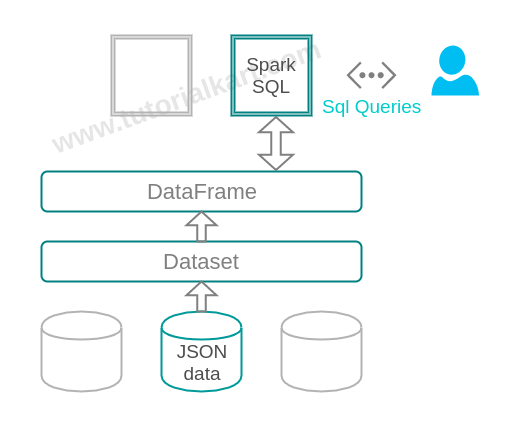

Dataset loads JSON data source as a distributed collection of data. DataFrame is Dataset with data arranged into named columns. The architecture containing JSON data source, Dataset, Dataframe and Spark SQL is shown below :

Load data from JSON file and execute SQL query

Following is a step-by-step process to load data from JSON file and execute SQL query on the loaded data from JSON file.

1. Create a Spark Session

Provide application name and set master to local with two threads.

SparkSession spark = SparkSession

.builder()

.appName("Java Spark SQL data source JSON example")

.master("local[2]")

.getOrCreate(); 2. Read JSON data source

SparkSession.read().json(String path) can accept either a single text file or a directory storing text files, and load the data to Dataset.

Dataset<Row> people = spark.read().json("path-to-json-files"); 3. Create a temporary view using the DataFrame

people.createOrReplaceTempView("people"); 4. Run SQL query

Temporary view could be considered as a table and attributes under schema root as columns

root |-- name: string (nullable = true) |-- salary: long (nullable = true)

Table : people Columns : name, salary

Dataset<Row> namesDF = spark.sql("SELECT name FROM people WHERE salary>3500 ");

namesDF.show(); 5. Stop spark session

Complete java program to load data from JSON file and execute SQL query in given below:

data/sql/json/people.json

{"name":"Michael", "salary":3000}

{"name":"Andy", "salary":4500}

{"name":"Justin", "salary":3500}

{"name":"Berta", "salary":4000}

{"name":"Raju", "salary":3000}

{"name":"Chandy", "salary":4500}

{"name":"Joey", "salary":3500}

{"name":"Mon", "salary":4000}

{"name":"Rachel", "salary":4000} JSONsqlExample.java

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.SparkSession;

public class JSONsqlExample {

public static void main(String[] args) {

SparkSession spark = SparkSession

.builder()

.appName("Java Spark SQL data source JSON example")

.master("local[2]")

.getOrCreate();

// A JSON dataset is pointed to by path.

// The path can be either a single text file or a directory storing text files

Dataset<Row> people = spark.read().json("data/sql/json/");

// The inferred schema can be visualized using the printSchema() method

System.out.println("Schema\n=======================");

people.printSchema();

// Creates a temporary view using the DataFrame

people.createOrReplaceTempView("people");

// SQL statements can be run by using the sql methods provided by spark

Dataset<Row> namesDF = spark.sql("SELECT name FROM people WHERE salary>3500 ");

System.out.println("\n\nSQL Result\n=======================");

namesDF.show();

// stop spark session

spark.stop();

}

} Output

Schema ======================= root |-- name: string (nullable = true) |-- salary: long (nullable = true) SQL Result ======================= +------+ | name| +------+ | Andy| | Berta| |Chandy| | Mon| |Rachel| +------+

Conclusion

In this Apache Spark Tutorial, we have learnt to load a json file into Dataset and access the data using SQL queries through Spark SQL.