Spark Shell is an interactive shell through which we can access Spark’s API. Spark provides the shell in two programming languages: Scala and Python.

In this tutorial, we shall learn the usage of Scala Spark Shell with a basic word count example.

Prerequisites

It is assumed that you have already installed Apache Spark on your local machine. If not, please refer to Install Spark on Ubuntu or Install Spark on MacOS.

Hands on Scala Spark Shell

Start Spark interactive Scala Shell

To start the Scala Spark shell, open a Terminal and run the following command.

$ spark-shell

For the word-count example, we shall start the shell with the option --master local[4], meaning the Spark context of this shell runs in local mode on this machine with 4 worker threads.

$ spark-shell --master local[4]

If you accidentally started the Spark shell without options, kill the shell instance and start it again with the option.

~$ spark-shell --master "local[4]"

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

17/11/12 13:07:31 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/11/12 13:07:31 WARN Utils: Your hostname, tutorialkart resolves to a loopback address: 127.0.0.1; using 192.168.0.104 instead (on interface wlp7s0)

17/11/12 13:07:31 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

17/11/12 13:07:41 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

Spark context Web UI available at http://192.168.0.104:4040

Spark context available as 'sc' (master = local[4], app id = local-1510472252847).

Spark session available as 'spark'.

Welcome to

          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 2.2.0
          /_/

Using Scala version 2.11.8 (OpenJDK 64-Bit Server VM, Java 1.8.0_151)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

From the above shell startup, the following points can be noted:

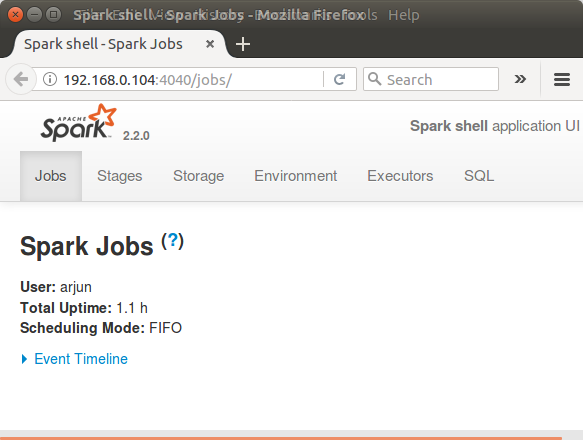

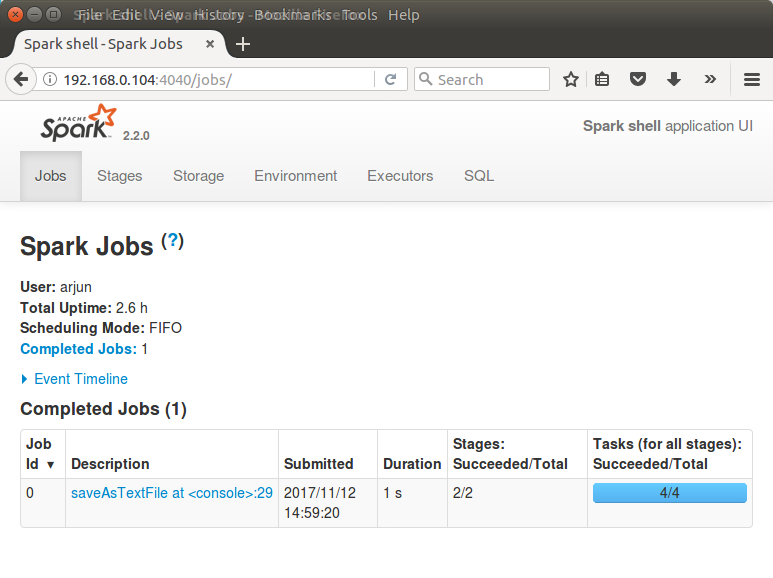

Spark context Web UI is available at http://192.168.0.104:4040. Open this URL in a browser to view it.

Spark context available as sc, meaning you may access the Spark context in the shell through the variable named ‘sc’.

Spark session available as spark, meaning you may access the Spark session in the shell through the variable named ‘spark’.

Word-Count Example with Spark (Scala) Shell

The following three commands are used for the Word Count example in Spark Shell:

/** map */

var map = sc.textFile("/path/to/text/file").flatMap(line => line.split(" ")).map(word => (word,1));

/** reduce */

var counts = map.reduceByKey(_ + _);

/** save the output to file */

counts.saveAsTextFile("/path/to/output/")

Map

In this step, using the Spark context variable, sc, we read a text file.

sc.textFile("/path/to/text/file")

Then we split each line using a space " " as the separator.

flatMap(line => line.split(" "))

And we map each word to a tuple (word, 1), where 1 is the count for that single occurrence of the word.

map(word => (word,1))

We use the tuple (word,1) as the (key, value) pair in the reduce stage.
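To make the map step concrete, the sketch below applies the same flatMap and map calls to a small in-memory list of lines, using plain Scala collections rather than an RDD. The sample lines and the names MapStepSketch, lines, words and pairs are assumptions made for illustration; on an RDD the calls read the same but are evaluated lazily across partitions.

```scala
// Plain Scala collections stand in for the RDD here (an assumption for illustration).
object MapStepSketch {
  // Hypothetical sample input: two lines of text.
  val lines: List[String] = List("spark is fast", "spark is fun")

  // flatMap splits every line on a single space and flattens
  // the per-line arrays into one list of words.
  val words: List[String] = lines.flatMap(line => line.split(" "))

  // map pairs each word with the count 1, giving (key, value) tuples.
  val pairs: List[(String, Int)] = words.map(word => (word, 1))

  def main(args: Array[String]): Unit = {
    println(words)
    println(pairs)
  }
}
```

Note that a word appearing twice, such as "spark", yields two separate (spark,1) tuples at this point; they are only combined in the reduce stage.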

Reduce

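We reduce the tuples by key: the values of all tuples with the same word are added together, giving the total count for each word.

var counts = map.reduceByKey(_ + _);

Save counts to local file

The counts can be saved to a local output directory, where Spark writes them as part files.

counts.saveAsTextFile("/path/to/output/")

When you run all the commands in a Terminal, Spark Shell looks like: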

scala> var map = sc.textFile("/home/arjun/data.txt").flatMap(line => line.split(" ")).map(word => (word,1));

map: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[5] at map at <console>:24

scala> var counts = map.reduceByKey(_ + _);

counts: org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[6] at reduceByKey at <console>:26

scala> counts.saveAsTextFile("/home/arjun/output/");

scala>

You can verify the output of the word count.

$ ls

part-00000 part-00001 _SUCCESS

A sample of the contents of the output file part-00000 is shown below:

/home/arjun/output$ cat part-00000

(branches,1)

(sent,1)

(mining,1)

(tasks,4)

We have successfully counted the occurrences of each unique word in a file with the Word Count example run on Scala Spark Shell.
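As a rough illustration of what the reduce step computes, the sketch below sums the 1s per word using plain Scala collections. It is only a local stand-in under assumed sample data: the names ReduceStepSketch, pairs and counts and the input are hypothetical, and on an RDD Spark performs the same per-key addition in parallel across partitions.

```scala
// Plain Scala collections stand in for the RDD here (an assumption for illustration).
object ReduceStepSketch {
  // Hypothetical (word, 1) tuples, as produced by the map step.
  val pairs: List[(String, Int)] =
    List(("spark", 1), ("is", 1), ("fast", 1), ("spark", 1), ("is", 1), ("fun", 1))

  // Group the tuples by word, then add up the values in each group
  // with _ + _, the same function that is passed to reduceByKey.
  val counts: Map[String, Int] =
    pairs.groupBy(_._1).map { case (word, group) =>
      (word, group.map(_._2).reduce(_ + _))
    }

  def main(args: Array[String]): Unit =
    // Sort by word only to make the printed order predictable.
    counts.toList.sortBy(_._1).foreach(println)
}
```

Each printed tuple has the same (word,count) shape as the lines in the part files shown above.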

You may use Spark Context Web UI to check the details of the Job (Word Count) that we have just run.

Navigate through other tabs to get an idea of Spark Web UI and the details about the Word Count Job.

Spark Shell Suggestions

Suggestions

Spark Shell can provide suggestions. Type part of the command and press the Tab key for suggestions.

scala> counts.sa

sample sampleByKeyExact saveAsHadoopFile saveAsNewAPIHadoopFile saveAsSequenceFile

sampleByKey saveAsHadoopDataset saveAsNewAPIHadoopDataset saveAsObjectFile saveAsTextFile

Kill the Spark Shell Instance

To kill the Spark shell instance, hit Control+Z to suspend the current shell, then find the process id (pid) of the Spark instance and terminate it with the kill command. To exit the shell normally instead, type :quit at the scala> prompt.

Find the pid:

~$ ps -aef | grep spark

arjun 8895 8113 0 13:01 pts/16 00:00:00 bash /usr/lib/spark/bin/spark-shell

arjun 8906 8895 91 13:01 pts/16 00:01:13 /usr/lib/jvm/default-java/jre/bin/java -cp /usr/lib/spark/conf/:/usr/lib/spark/jars/* -Dscala.usejavacp=true -Xmx1g org.apache.spark.deploy.SparkSubmit --class org.apache.spark.repl.Main --name Spark shell spark-shell

arjun 9106 8113 0 13:03 pts/16 00:00:00 grep --color=auto spark

In this case, 8906 is the pid.

Kill the instance using the pid:

~$ kill -9 8906

Conclusion

In this Apache Spark Tutorial – Scala Spark Shell, we have learnt how to use Spark Shell with the Scala programming language, with the help of a Word Count example.