Spark RDD reduce()

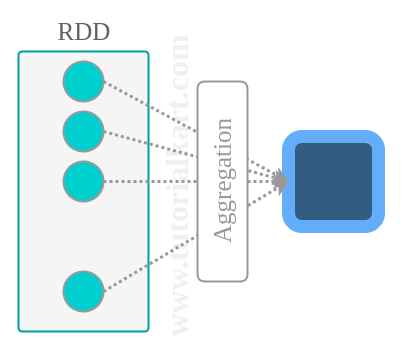

In this Spark Tutorial, we shall learn to reduce an RDD to a single element. Reduce is an aggregation of elements using a function.

Following are the two important properties that an aggregation function should have

- Commutative A+B = B+A – ensuring that the result would be independent of the order of elements in the RDD being aggregated.

- Associative (A+B)+C = A+(B+C) – ensuring that any two elements associated in the aggregation at a time does not effect the final result.

Examples of such function are Addition, Multiplication, OR, AND, XOR, XAND.

Syntax of RDD.reduce()

The syntax of RDD reduce() method is

RDD.reduce(<function>)<function> is the aggregation function. It could be passed as an argument or you may use lambda function to define the aggregation function.

Java Example – Spark RDD reduce()

In this example, we will take an RDD of Integers and reduce them to their sum using RDD.reduce() method.

RDDreduceExample.java

import java.util.Arrays;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

public class RDDreduceExample {

public static void main(String[] args) {

// configure spark

SparkConf sparkConf = new SparkConf().setAppName("Read Text to RDD")

.setMaster("local[2]").set("spark.executor.memory","2g");

// start a spark context

JavaSparkContext sc = new JavaSparkContext(sparkConf);

// read text file to RDD

JavaRDD<Integer> numbers = sc.parallelize(Arrays.asList(14,21,88,99,455));

// aggregate numbers using addition operator

int sum = numbers.reduce((a,b)->a+b);

System.out.println("Sum of numbers is : "+sum);

}

}Run the above Spark Java application, and you would get the following output in console.

17/11/29 11:26:42 INFO DAGScheduler: ResultStage 0 (reduce at RDDreduceExample.java:20) finished in 0.330 s

17/11/29 11:26:43 INFO DAGScheduler: Job 0 finished: reduce at RDDreduceExample.java:20, took 0.943121 s

Sum of numbers is : 677

17/11/29 11:26:43 INFO SparkContext: Invoking stop() from shutdown hookPython Example – Spark RDD reduce()

In this example, we will implement the same use case of reducing integers in RDD to their sum, but we shall do that using Python.

import sys

from pyspark import SparkContext, SparkConf

if __name__ == "__main__":

# create Spark context with Spark configuration

conf = SparkConf().setAppName("Read Text to RDD - Python")

sc = SparkContext(conf=conf)

# read input text file to RDD

numbers = sc.parallelize([1,7,8,9,5,77,48])

# aggregate RDD elements using addition function

sum = numbers.reduce(lambda a,b:a+b)

print "sum is : " + str(sum)Run the above Spark RDD reduce Python Example using spark-submit

$ spark-submit spark-rdd-reduce-example.pyYou will get the following output in console.

17/11/29 11:39:06 INFO DAGScheduler: ResultStage 0 (reduce at /home/arjun/workspace/spark/spark-rdd-reduce-example.py:15) finished in 0.960 s

17/11/29 11:39:06 INFO DAGScheduler: Job 0 finished: reduce at /home/arjun/workspace/spark/spark-rdd-reduce-example.py:15, took 1.552233 s

sum is : 155

17/11/29 11:39:06 INFO SparkContext: Invoking stop() from shutdown hookConclusion

In this Spark Tutorial, we learned the syntax and examples for RDD.reduce() method.